In this post I show the steps to build and deploy a SlackBot into AWS using Chalice. The bot uses a trained machine learning model to predict the R&D team most able to answer a question that the user has entered. Like so:

The detail on training the machine learning model will be in a later post. For now just know that text from Jira tickets assigned to scrum teams labelled A to I is used to build a classifier so we can predict a team given a question. The trained model is stored in S3.

Serverless hosting

The SlackBot is basically a web server responding to incoming requests via a REST endpoint. You could just run an Internet-accessible server somewhere, but this seems like a perfect application to run in AWS Lambda: each request is short-lived and stateless. The problem with AWS is that there's a lot to learn to put in place all the bits and pieces you need: Lambda functions, API Gateway, IAM roles, etc. Open source tools and frameworks can take care of a lot of this overhead.

AWS Chalice is "...a microframework for writing serverless apps in python. It allows you to quickly create and deploy applications that use AWS Lambda."

I won't repeat here what you can read in the Chalice docs. I'd suggest building a Hello World REST API to begin with and then following along with this post.

Slack client

To interact with Slack from our Python code we need to use slackclient. Since our application is already running in the Chalice framework we won't be using much of the Slack client library. In fact, it's only used to make the API call that returns the response to the question.
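As a minimal sketch of that single API call, assuming the 1.x slackclient package (where the class is `SlackClient` and requests go through `api_call`). The helper names `make_client` and `post_answer` are my own for illustration, not part of the library:

```python
def make_client(token):
    """Build a Slack client from the bot token (slackclient 1.x import path)."""
    # Imported lazily so the rest of the sketch works without the package installed.
    from slackclient import SlackClient

    return SlackClient(token)


def post_answer(client, channel, text):
    """Send `text` to `channel` using Slack's chat.postMessage Web API method."""
    return client.api_call("chat.postMessage", channel=channel, text=text)
```

In the bot, `client` would be built once from the SLACK_BOT_TOKEN value and `channel` would come from the incoming event.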

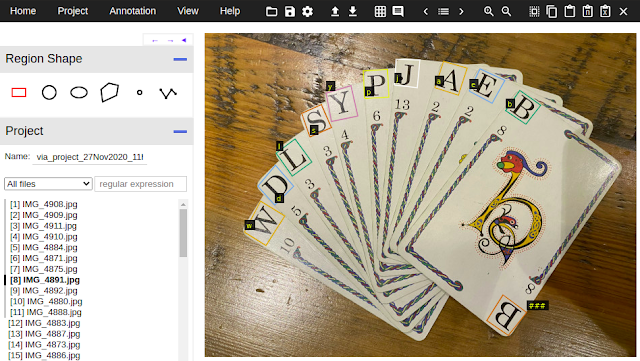

The bot does need to be registered in your Slack account. I won't go step-by-step through that, but here is a screenshot of the critical page showing the configuration of the Event Subscriptions:

You'll see above that there's a Request URL to fill out, and in this screenshot it's referring to an AWS location. We haven't got that far yet; there's a bit of a chicken-and-egg situation here. We have to build the bot and deploy it via Chalice to get the URL to plug in here. However, in order to deploy that bot we need some info from Slack:

- Basic Information / Verification Token -- (for the SLACK_VERIFICATION_TOKEN)

- OAuth & Permissions / Bot User OAuth Access Token -- (for the SLACK_BOT_TOKEN)

The Code

If you made the Hello World Chalice application you'll be familiar with the main components:

- app.py - where we'll build the slackbot

- .chalice/config.json - where we'll configure the app

- requirements.txt - the python libs we need

This first block of code does some imports and some initialization. The imports refer to some packages which we'll need to bundle up into the deployment zip for the Lambda function. They are declared in the requirements.txt file for Chalice:

Part of the initialization is reading in environment variables. It's good practice to separate these configuration settings from your code. In addition to the Slack tokens mentioned earlier, we also specify the S3 bucket and key for the machine learning model's location. Here's an example config.json file:
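A minimal sketch of what such a file might contain, written as a Python dict so the same snippet can also show how app.py could read the values back. The stage-level keys (`environment_variables`, `lambda_memory_size`, `manage_iam_role`, `iam_role_arn`) are Chalice settings; every value, the app name, and the `MODEL_BUCKET` / `MODEL_KEY` variable names are placeholders I've assumed for illustration:

```python
import json
import os

# Illustrative values only. MODEL_BUCKET and MODEL_KEY are hypothetical names.
config = {
    "version": "2.0",
    "app_name": "slackbot",
    "stages": {
        "dev": {
            "api_gateway_stage": "api",
            "lambda_memory_size": 1536,
            "manage_iam_role": False,
            "iam_role_arn": "arn:aws:iam::123456789012:role/slackbot-dev",
            "environment_variables": {
                "SLACK_BOT_TOKEN": "xoxb-placeholder",
                "SLACK_VERIFICATION_TOKEN": "placeholder",
                "MODEL_BUCKET": "my-model-bucket",
                "MODEL_KEY": "models/team-classifier.pkl",
            },
        }
    },
}

# This is the text that would be saved as .chalice/config.json.
config_json = json.dumps(config, indent=2)


def read_settings(env=os.environ):
    """Collect the settings app.py needs from environment variables."""
    return {
        "bot_token": env["SLACK_BOT_TOKEN"],
        "verification_token": env["SLACK_VERIFICATION_TOKEN"],
        "model_bucket": env["MODEL_BUCKET"],
        "model_key": env["MODEL_KEY"],
    }
```

Once deployed, Chalice sets each entry under `environment_variables` on the Lambda function, so `read_settings()` with no arguments would pick them up from `os.environ`.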

There are a couple of other settings in this config file to look at:

lambda_memory_size - I've set this to 1536 MB. Note that Lambda allocates CPU power in proportion to the memory setting. Since we're loading and running an ML model, the default allocation is not sufficient. My strategy is to start reasonably high and then tune down. The CloudWatch logs show the time spent and memory used for each run, which is good information to use when tuning.

manage_iam_role and iam_role_arn - Chalice will attempt to build an IAM role for you with the correct permissions, but this doesn't always work. If S3 access has not been granted on the role you can add it in the AWS console and then provide the role's ARN in config.json. You'll also need to set manage_iam_role to false.

Delays

Eagle-eyed readers will have noticed that in the CloudWatch log screenshot above there was a big time difference between two runs: one was 10 seconds and the next less than 1 millisecond! The reason is to do with the way AWS Lambda works. If the function is "cold" (the first run in a new container), we have to perform the costly process of retrieving the ML model from S3 and loading it into scikit-learn. For all subsequent calls, while the function is "warm", the model will already be in memory. We have to code for these "cold" and "warm" states to provide acceptable behaviour.

The code snippet above shows where we load and use the model. load_model() simply checks whether the clf has been initialized. If not, it downloads the model from S3 to a /tmp location in the Lambda container and loads it. At the time of writing Lambda provides 512 MB of /tmp space, so watch the size of your model. While the function is "warm", subsequent calls to load_model() will return quickly without needing to load the model.
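A minimal sketch of that lazy-loading pattern, under a few assumptions of mine: the model is a pickle file, the bucket and key come from hypothetical `MODEL_BUCKET` / `MODEL_KEY` environment variables, and the download step is passed in as a parameter so it can be swapped out when experimenting locally:

```python
import os
import pickle

MODEL_PATH = "/tmp/model.pkl"  # hypothetical file name under Lambda's /tmp
clf = None  # module-level, so it survives between invocations of a warm container


def download_from_s3(path):
    """Fetch the pickled model from S3 (env var names are assumptions)."""
    import boto3  # imported lazily so the sketch can be exercised without AWS

    s3 = boto3.client("s3")
    s3.download_file(os.environ["MODEL_BUCKET"], os.environ["MODEL_KEY"], path)


def load_model(download=download_from_s3, path=MODEL_PATH):
    """Return the classifier, downloading and unpickling it only on a cold call."""
    global clf
    if clf is None:
        download(path)
        with open(path, "rb") as f:
            clf = pickle.load(f)
    return clf
```

The important part is that `clf` lives at module level: Lambda keeps the module loaded while the container is warm, so only the first call pays for the download.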

You might be wondering why we didn't just load the model at the top of the script. This would only load it once, and it would happen naturally on the first "cold" run. The problem with this is to do with how Slack interacts with a SlackBot.

The function above is called from Slack whenever there's an incoming event based on the event types we've registered for (screenshot near the top of this post). It's also called during registration as the "challenge" to check that this is the bot matching the registration. The first if statement checks that the incoming token from Slack matches our verification token. Next, if this is a challenge request, we simply turn around and send the challenge string back.
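A minimal sketch of the checks in that function, written as a plain function so it can be read without Chalice. The `url_verification` type, the `challenge` field, and the `X-Slack-Retry-Num` header come from Slack's Events API; the function name, the 403 on a bad token, and the payload shapes are my assumptions:

```python
def handle_slack_event(body, headers, verification_token):
    """Return (status_code, payload) for one incoming Slack Events API request."""
    # 1. Reject anything that doesn't carry our verification token.
    if body.get("token") != verification_token:
        return 403, {"error": "invalid token"}

    # 2. URL verification handshake: echo the challenge string straight back.
    if body.get("type") == "url_verification":
        return 200, {"challenge": body.get("challenge")}

    # 3. Slack marks retried deliveries with an X-Slack-Retry-Num header.
    #    Answer 200 immediately so the retry is absorbed without any work.
    lowered = {key.lower(): value for key, value in headers.items()}
    if "x-slack-retry-num" in lowered:
        return 200, {"status": "retry ignored"}

    # 4. Anything else is a real event for the bot to process.
    return 200, {"status": "accepted", "event": body.get("event", {})}
```

In a Chalice app this would be called from a view registered with `@app.route('/slack/events', methods=['POST'])`, passing in `app.current_request.json_body` and `app.current_request.headers`. Note that none of the first three branches touches the model, so they all return quickly even on a cold start.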

The third if statement is an interesting one, and a reason why we had to handle the model loading the way we did. In the Events API doc one of the failure conditions is "we wait longer than 3 seconds to receive a valid response from your server". This also covers the "challenge" request above, so if we spent 10 seconds loading the ML model we wouldn't even be able to register our bot! The doc goes on to say that failures are retried immediately, then after 1 minute, and then after 5 minutes.

This code could deal more thoroughly with the various failure scenarios and handle each accordingly but, for simplicity and because this isn't a mission-critical app, I've written it to simply absorb retry attempts by immediately returning a 200 OK. The reason is that my first "cold" run would take longer than the 3 seconds, so a retry would come in. This might even cause AWS to spawn another Lambda function because my first one was still running. Depending on timing, I might get two or three identical answers in the Slack channel as the runs eventually completed.

So, finally we get onto the bot actually performing the inference over the incoming question and returning a string suggesting the team to contact. This is actually quite straightforward - it looks for the word "who" in the question and then passes the whole string into the predict function to get a classification. Note that load_model() is only called if "who" is found.
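A minimal sketch of that last step. I'm assuming the classifier is a scikit-learn pipeline whose `predict` accepts a list of raw strings, that the "who" check is case-insensitive, and that the reply wording is up to you; `answer_question` and `get_model` are names I've made up for illustration:

```python
def answer_question(text, get_model):
    """Return a reply for `text`, or None if it isn't a "who" question."""
    if "who" not in text.lower():
        return None  # the model is never loaded for messages we won't answer

    clf = get_model()  # e.g. load_model, which is instant when warm
    team = clf.predict([text])[0]  # predict takes a batch, so wrap the string
    return "Try asking team {}".format(team)
```

Passing the loader in as `get_model` keeps the expensive load behind the "who" check, which is the behaviour described above.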

Deployment

If you ran through the Chalice Hello World app earlier you will have got to the point where you ran "chalice deploy", it did its magic and created all the AWS stuff for you, and you were up and running. This might work for this SlackBot, but one problem I've run into is the 50 MB zip size limit that AWS Lambda imposes. If your deployment zip (all the code and packages) is larger than this limit the deployment will fail. All is not lost though: for whatever reason, if you deploy your zip from S3 rather than uploading it directly you can go beyond this limit.

Chalice provides an alternative to the automatic deployment method, allowing you to use AWS CloudFormation instead. This way of deploying has a side benefit for the size limit problem in that it uses S3 to hold the package for deployment. Calling "chalice package" rather than "chalice deploy" creates the zip and the SAM template for you to then deploy using the AWS CLI. To automate this for my SlackBot I built a bash script:
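Here's a rough sketch of the same sequence in Python rather than bash, so treat it as an illustration of the steps and not the script itself. The stack name, bucket name, and output directory are placeholders, and I'm assuming the generated template exposes the API URL as a stack output named `EndpointURL`:

```python
import subprocess


def build_commands(stack_name, bucket, out_dir="packaged"):
    """Return the CLI commands for a package-then-deploy run, in order."""
    sam = "{}/sam.json".format(out_dir)
    packaged = "{}/packaged.yaml".format(out_dir)
    return [
        # 1. Have Chalice write the deployment zip and SAM template to out_dir.
        ["chalice", "package", out_dir],
        # 2. Upload the zip to S3 and rewrite the template to point at it.
        ["aws", "cloudformation", "package",
         "--template-file", sam,
         "--s3-bucket", bucket,
         "--output-template-file", packaged],
        # 3. Create or update the CloudFormation stack from the rewritten template.
        ["aws", "cloudformation", "deploy",
         "--template-file", packaged,
         "--stack-name", stack_name,
         "--capabilities", "CAPABILITY_IAM"],
        # 4. Print the API URL (output name is an assumption, see above).
        ["aws", "cloudformation", "describe-stacks",
         "--stack-name", stack_name,
         "--query", "Stacks[0].Outputs[?OutputKey=='EndpointURL'].OutputValue",
         "--output", "text"],
    ]


def deploy(stack_name, bucket):
    """Run each command in turn, stopping at the first failure."""
    for cmd in build_commands(stack_name, bucket):
        subprocess.run(cmd, check=True)
```

Calling `deploy("slackbot", "my-deploy-bucket")` would need the chalice and aws CLIs on the path and credentials configured.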

Registration

Finally, with the bot deployed we can register it with Slack. Remember that Request URL and the challenge process? Take the URL that either "chalice deploy" or "deploy.sh" printed out and add "/slack/events" to it so it looks something like this:

- https://##########.execute-api.us-east-1.amazonaws.com/api/slack/events

Paste this into the request URL form field and Slack will immediately attempt to verify your bot with a challenge request.

We're done!

The Serverless SlackBot is up and running and ready to answer your users' questions. In the next post I'll explain how the machine learning model was created, with a walkthrough of the code to collect the data from Jira, clean it, train a model on it and deliver it to S3.